How can you spend less time clearing your imaging backlog? And which forensic file format gives you the highest acquisition speed?

Those questions are not easy to answer. But OK, challenge accepted! Let’s measure and find out!

If you’re into spoilers, jump to the “Final Results” part. If not, read on.

And if you want to dive deeper into AFF4, the history behind its creation, its compression and hashing methods, as well as its advantages over other forensic file formats, check out this episode of our “Plug, Image, Repeat” newsletter!

Setting things up

How can we compare the productivity of two different file formats? Let’s design our experiment.

We’ll need our constants and our variables.

Constants

So, what should our constants be, our ceteris paribus, our ‘other things being equal’?

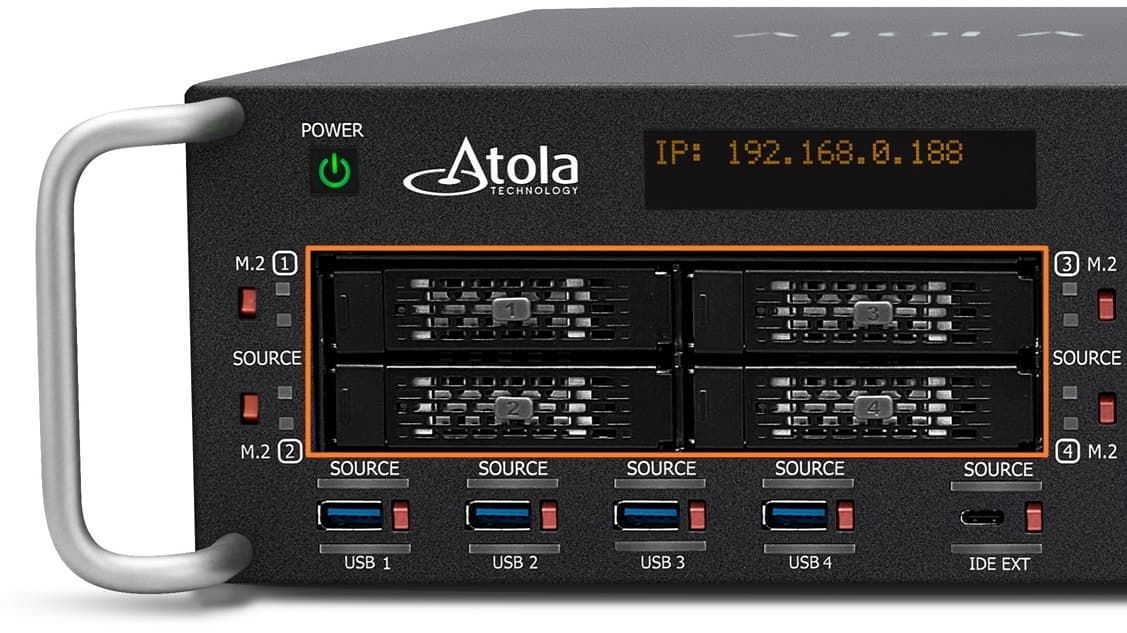

Hardware: Of course, we’ll use the latest version of our hardware imager, Atola TaskForce 2, with its extremely powerful, high-capacity components, including four new NVMe ports, running the latest firmware version, 2024.6.

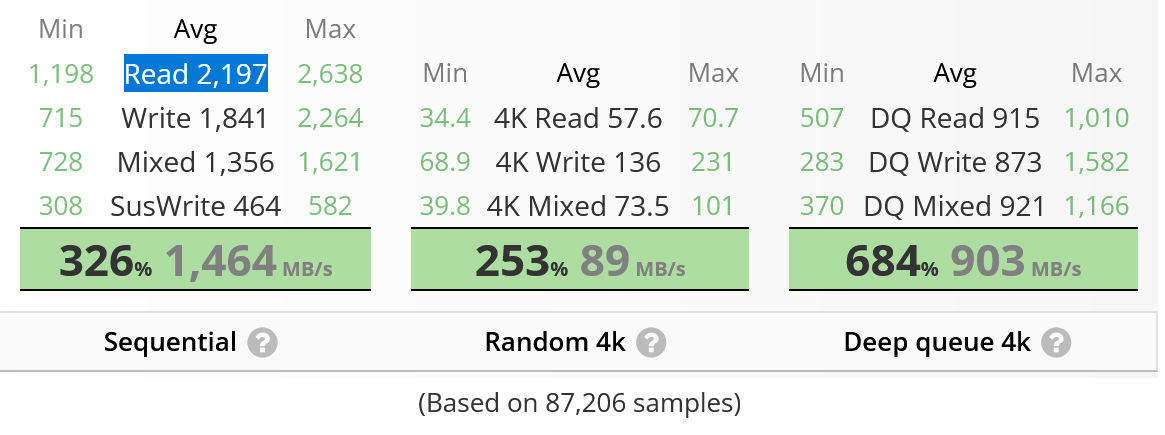

Source drive: Samsung SSD 970 EVO Plus 250GB, with an average sequential read speed of 2,197 MB/s, according to UserBenchmark. We’ll connect it directly to one of the NVMe ports of the TaskForce 2 hardware unit.

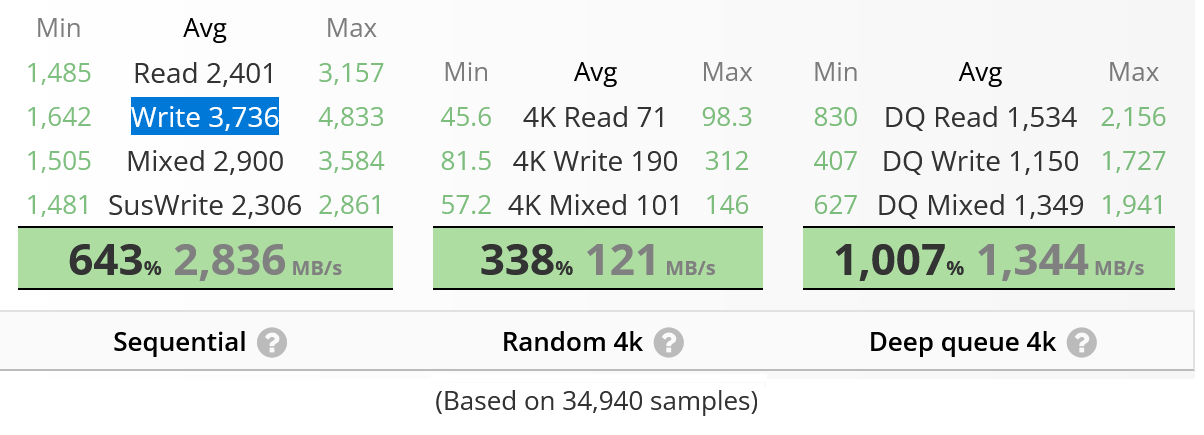

Target drive: Samsung SSD 990 PRO 1TB, with an average sequential write speed of 3,736 MB/s, according to UserBenchmark.

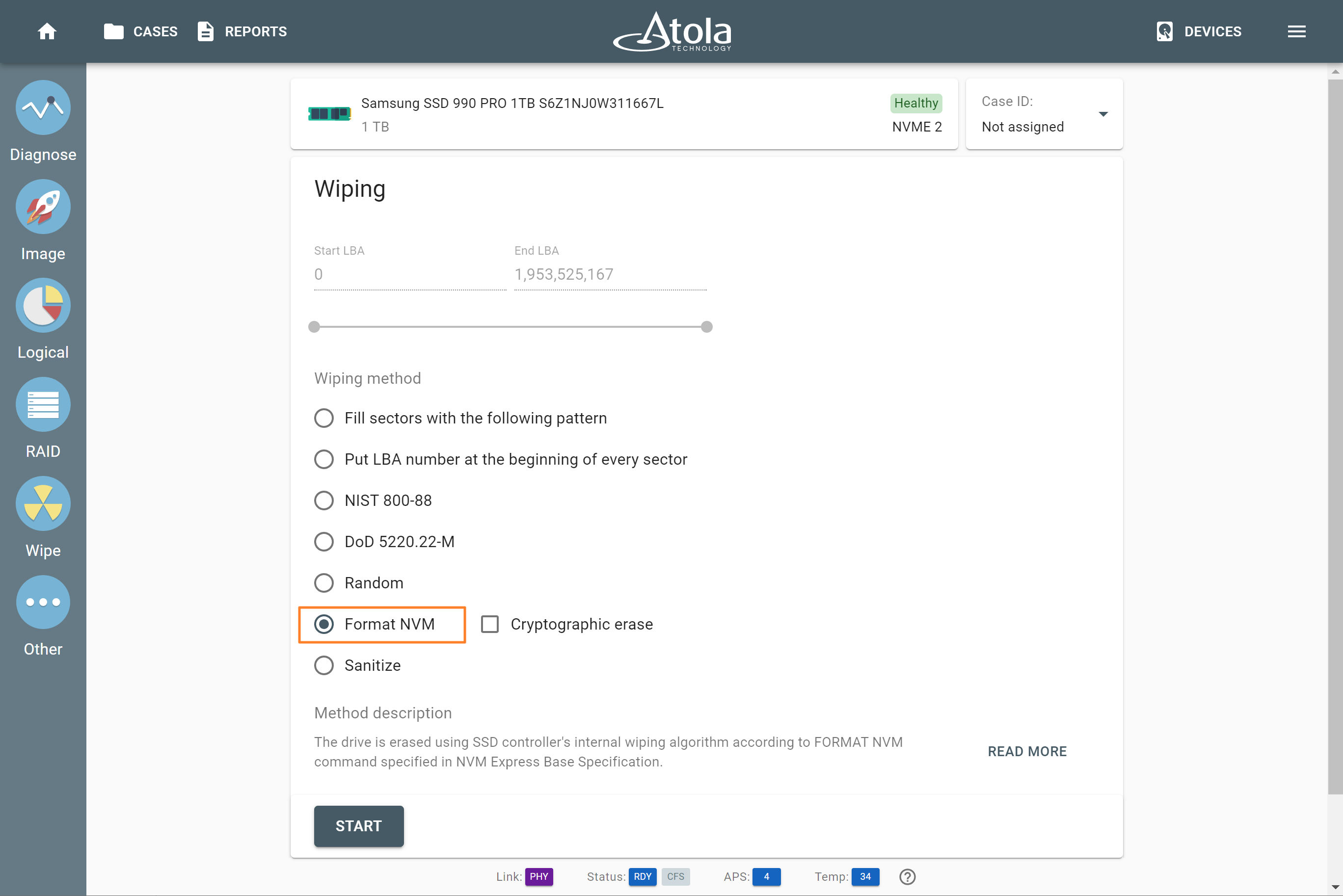

Before we begin, both source and target drives will be wiped clean on TaskForce 2 with the Format NVM command.

Variables

And what are the variables that will be changing during our experiment?

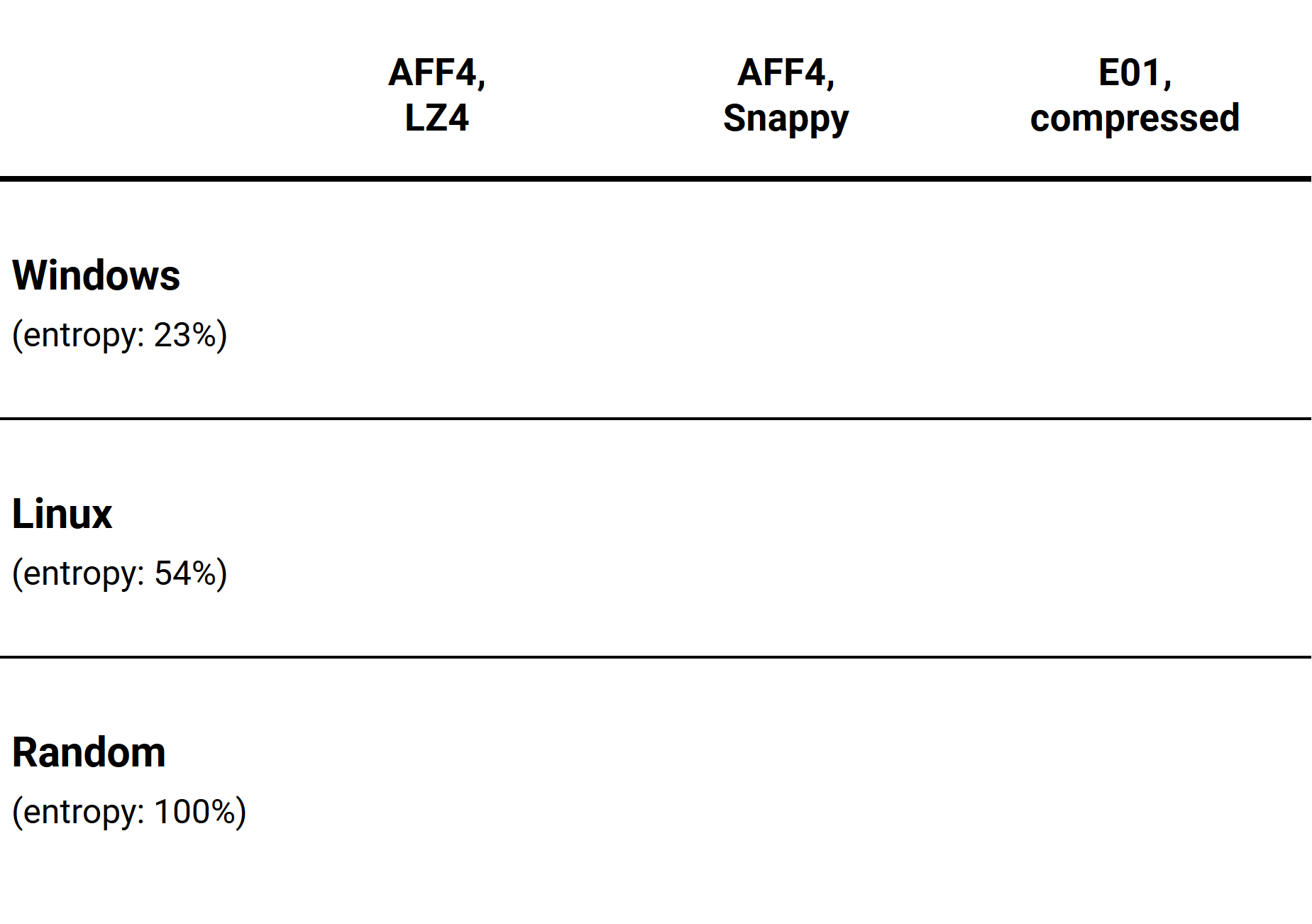

First, the source datasets for our 250GB source drive. These are three different images that we will write onto the source drive one at a time:

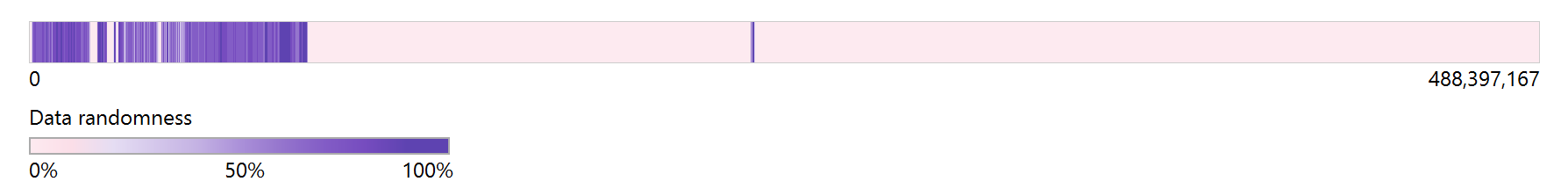

- Windows: An image of the official distribution of Windows 10 Pro 64-bit (Version 20H2). The data entropy of this image on the source drive is relatively low (around 23%).

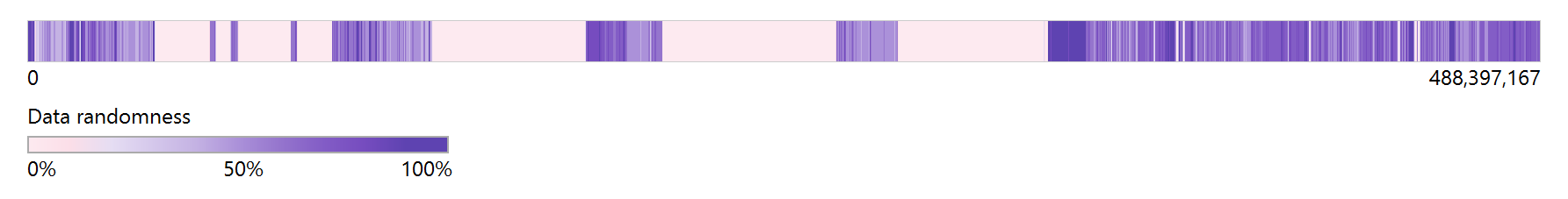

- Linux: A CentOS 8 image from one of Atola’s software engineers. The data entropy of this image on the source drive is medium (around 54%).

- Random data: The source drive is wiped on TaskForce 2 using the Random method, thus it is filled with random bytes. The data entropy of this image equals 100%. It simulates an encrypted volume such as BitLocker or a drive full of compressed files (videos, photos, archives).
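The article does not say how TaskForce 2 computes its entropy percentage. A common approach, and the one assumed in the minimal sketch below, is Shannon entropy per byte, scaled so that 8 bits per byte reads as 100%, then averaged over blocks read from the drive. The function names `normalized_entropy` and `drive_entropy` are hypothetical.

```python
import math
from collections import Counter


def normalized_entropy(data: bytes) -> float:
    """Shannon entropy of a byte buffer, scaled to 0..1 (1.0 = 8 bits per byte)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)  # iterating over bytes yields ints 0..255
    bits = -sum((c / total) * math.log2(c / total) for c in counts.values())
    return bits / 8.0


def drive_entropy(blocks) -> float:
    """Average normalized entropy over an iterable of blocks (e.g. 1 MiB reads).

    Assumption: a per-block average; the real tool may aggregate differently.
    """
    values = [normalized_entropy(b) for b in blocks]
    return sum(values) / len(values) if values else 0.0
```

Under this definition, a zero-filled region scores 0%, while random bytes (like the Random wipe pattern) score very close to 100%.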

Second, as targets, we will use two forensic file formats in three compression configurations:

- AFF4 with LZ4 compression.

- AFF4 with Snappy compression.

- E01 with default compression.
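As we understand the formats, both E01 and AFF4 split the image into fixed-size chunks and compress each chunk independently, which keeps random access into the finished image cheap. The codec is what differs: E01 uses zlib (deflate), while AFF4 here uses LZ4 or Snappy. The sketch below shows only that chunk-and-compress loop, with Python’s built-in `zlib` standing in for the codec; LZ4 and Snappy would need third-party packages and would plug into the same `codec` parameter. The 32 KiB chunk size and the names `compress_in_chunks` and `deflate` are assumptions for illustration, not TaskForce internals.

```python
import zlib
from typing import Callable

CHUNK_SIZE = 32 * 1024  # assumption: 32 KiB chunks, for illustration only


def compress_in_chunks(
    data: bytes, codec: Callable[[bytes], bytes], chunk_size: int = CHUNK_SIZE
) -> list[bytes]:
    """Split raw image data into fixed-size chunks and compress each one independently."""
    return [codec(data[i:i + chunk_size]) for i in range(0, len(data), chunk_size)]


def deflate(chunk: bytes) -> bytes:
    # zlib/deflate at its default level; a faster codec would replace only this function
    return zlib.compress(chunk)
```

Because every chunk passes through the codec, the codec’s throughput directly caps the imaging speed whenever the drives themselves are faster than the compressor.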

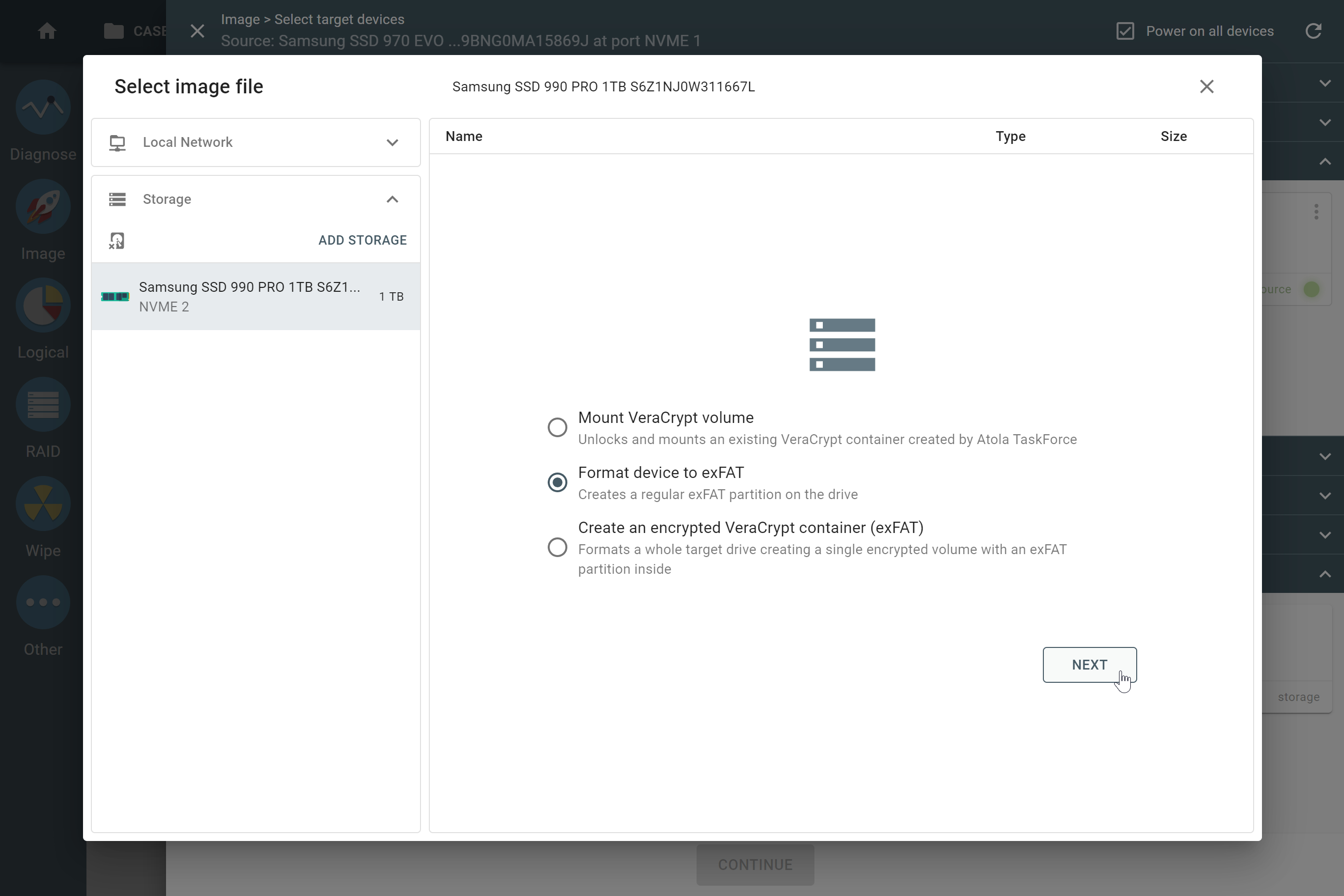

Before each measurement, a target file will be created on our target Samsung SSD 990 PRO 1TB drive, after the drive has been wiped with the Format NVM method and formatted in TaskForce 2 as Storage with an exFAT partition.

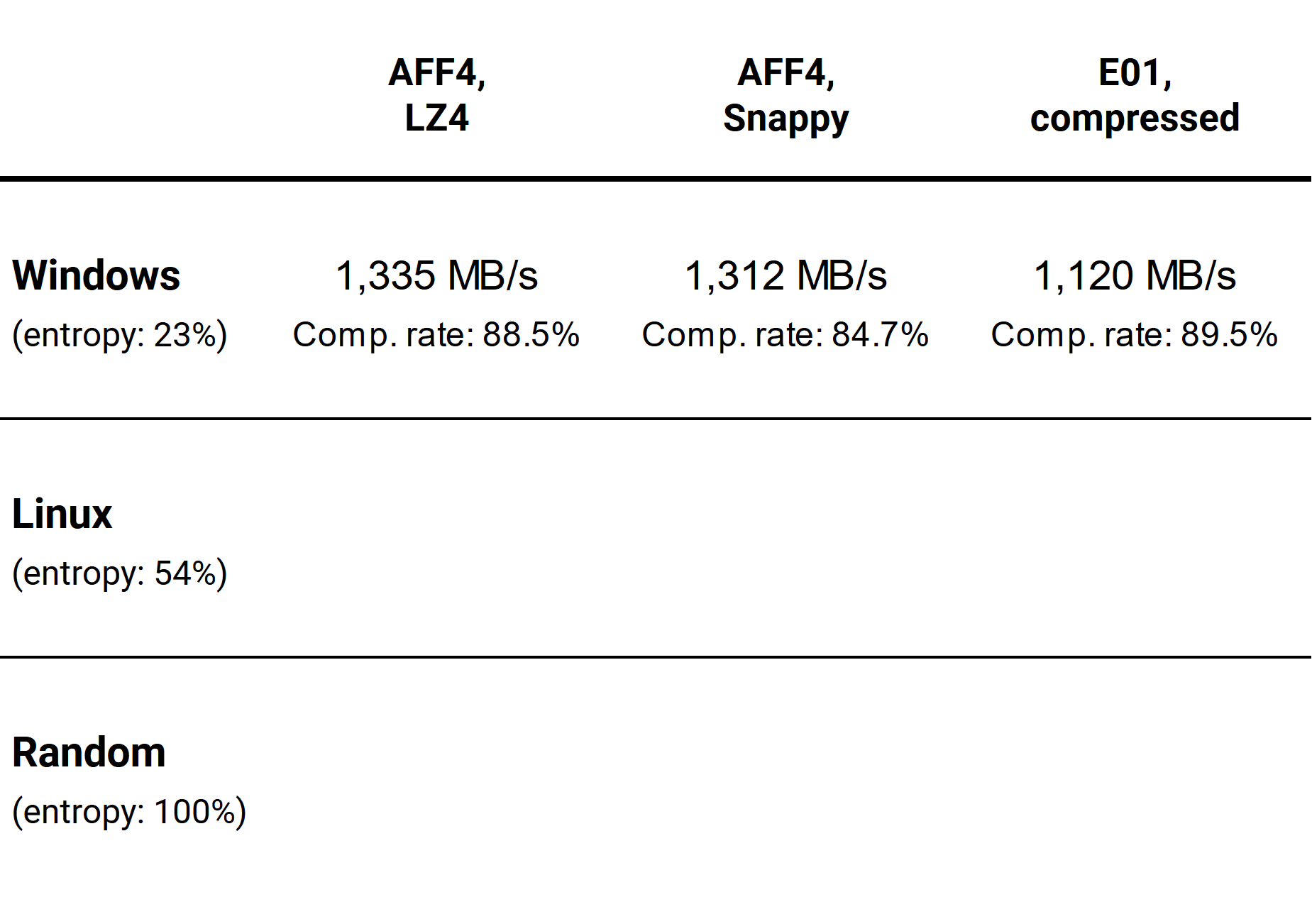

So, considering all the variables, here’s the initial table we need to fill with data:

Ready, steady, GO!

Everything is set, and we are ready to launch our speed measurements.

For each cell in our table (a combination of one of the source datasets and one of the chosen forensic file formats with compression), we will conduct three speed measurement sessions with identical settings. Then, we will calculate the average imaging speed for that combination.
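The averaging step for one table cell can be sketched as follows. The article does not say whether it averages per-session speeds or divides total bytes by total time; this sketch assumes the arithmetic mean of per-session speeds, expressed in decimal MB/s. The helper names are hypothetical.

```python
from statistics import mean


def session_speed_mb_s(bytes_copied: int, seconds: float) -> float:
    """Imaging speed of one session in decimal MB/s (1 MB = 1,000,000 bytes)."""
    return bytes_copied / seconds / 1_000_000


def average_speed(sessions: list[tuple[int, float]]) -> float:
    """Arithmetic mean of the per-session speeds for one dataset/format combination."""
    return mean(session_speed_mb_s(b, s) for b, s in sessions)
```

Each tuple holds the bytes imaged and the elapsed seconds for one of the three runs.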

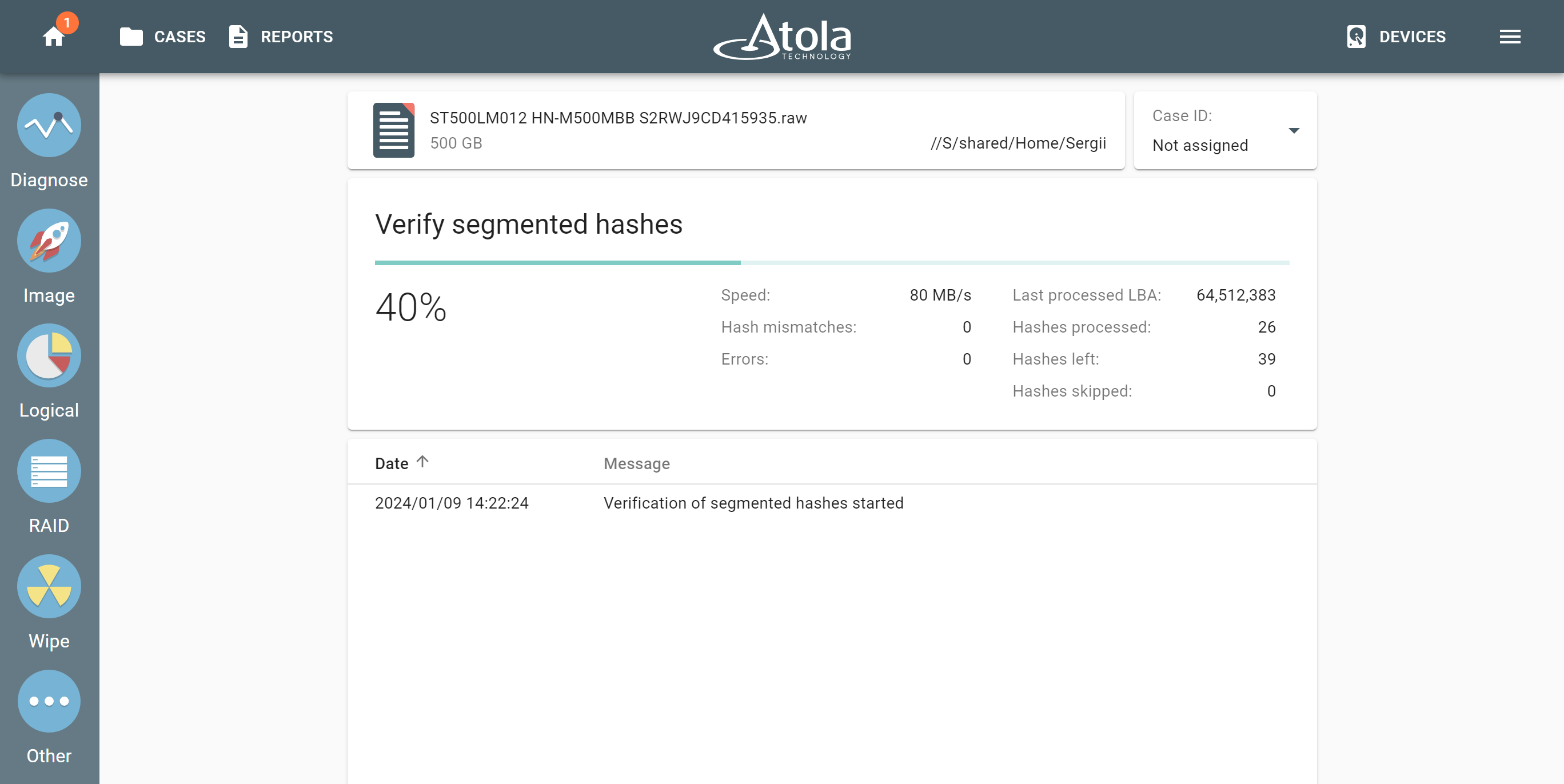

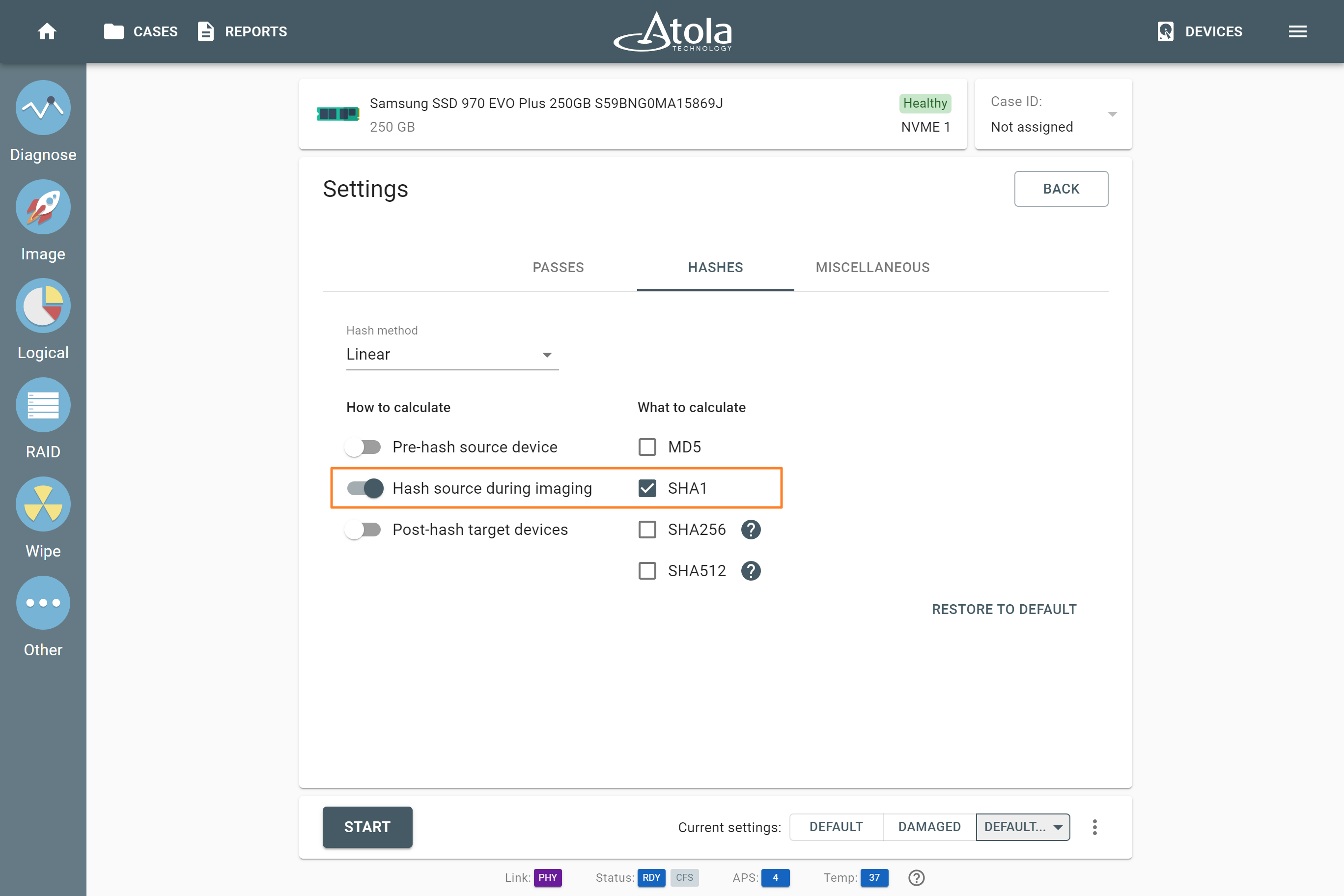

For each session, we’ll also calculate a SHA-1 hash during the imaging process.
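Hashing during imaging means the hash is updated block by block as data streams from source to target, so no second read pass is needed. The minimal sketch below uses Python’s `hashlib` and in-memory streams in place of real drives; the name `image_with_sha1` and the 1 MiB block size are assumptions, and a real imager would also compress and package each block before writing it.

```python
import hashlib
import io
import os
from typing import BinaryIO


def image_with_sha1(source: BinaryIO, target: BinaryIO, block_size: int = 1024 * 1024) -> str:
    """Copy source to target block by block, hashing each block as it passes through."""
    sha1 = hashlib.sha1()
    while True:
        block = source.read(block_size)
        if not block:
            break
        sha1.update(block)   # hash the raw source bytes, not any compressed output
        target.write(block)  # a real imager would compress/pack the block here
    return sha1.hexdigest()


# Usage with in-memory streams standing in for the source and target drives:
demo_data = os.urandom(3 * 1024 * 1024 + 123)
digest = image_with_sha1(io.BytesIO(demo_data), io.BytesIO())
print(digest == hashlib.sha1(demo_data).hexdigest())  # True: streamed hash equals one-shot hash
```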

After each session, we’ll save the imaging report with all the parameters, and then wipe the target storage drive using the Format NVM method to start again from a blank page.

After wiping, we’ll once again format our target drive in TaskForce 2 as Storage with a regular exFAT partition on it.

OK, let’s start with the Windows dataset, which has relatively low data entropy on our source drive:

- AFF4 with LZ4 compression. Run three times, wiping the target drive after each run. Measure average imaging speed.

- AFF4 with Snappy compression. Run, wipe, repeat, measure average.

- E01 with compression. Run, wipe, measure.

Here’s what we’ve got for this round:

As we can see, in this first round AFF4 with LZ4 compression shows the best imaging speed and comes close to E01 in compression rate.

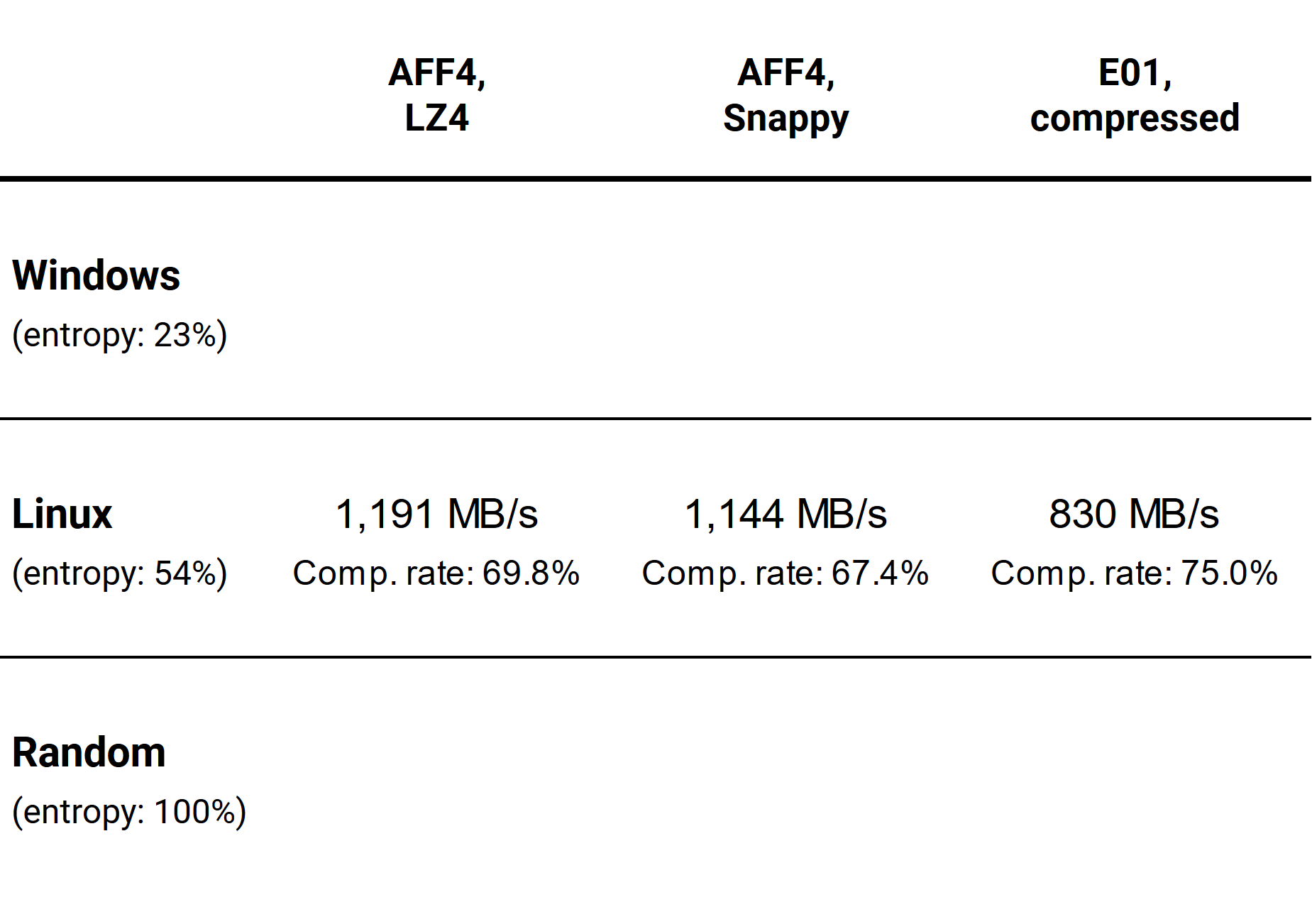

Now, let’s reset the stage by wiping our source and target drives with the Format NVM method and try the Linux dataset. You already know the drill: run three times, wiping the target drive after each run, and measure the average imaging speed.

Here are the average speeds and compression rates we’ve got for the Linux dataset with medium data entropy:

Once again, AFF4 with LZ4 compression is the fastest, slightly surpassing AFF4 with Snappy compression and leaving compressed E01 far behind. The compression rate of E01 is just slightly higher than that of AFF4 with LZ4 compression.

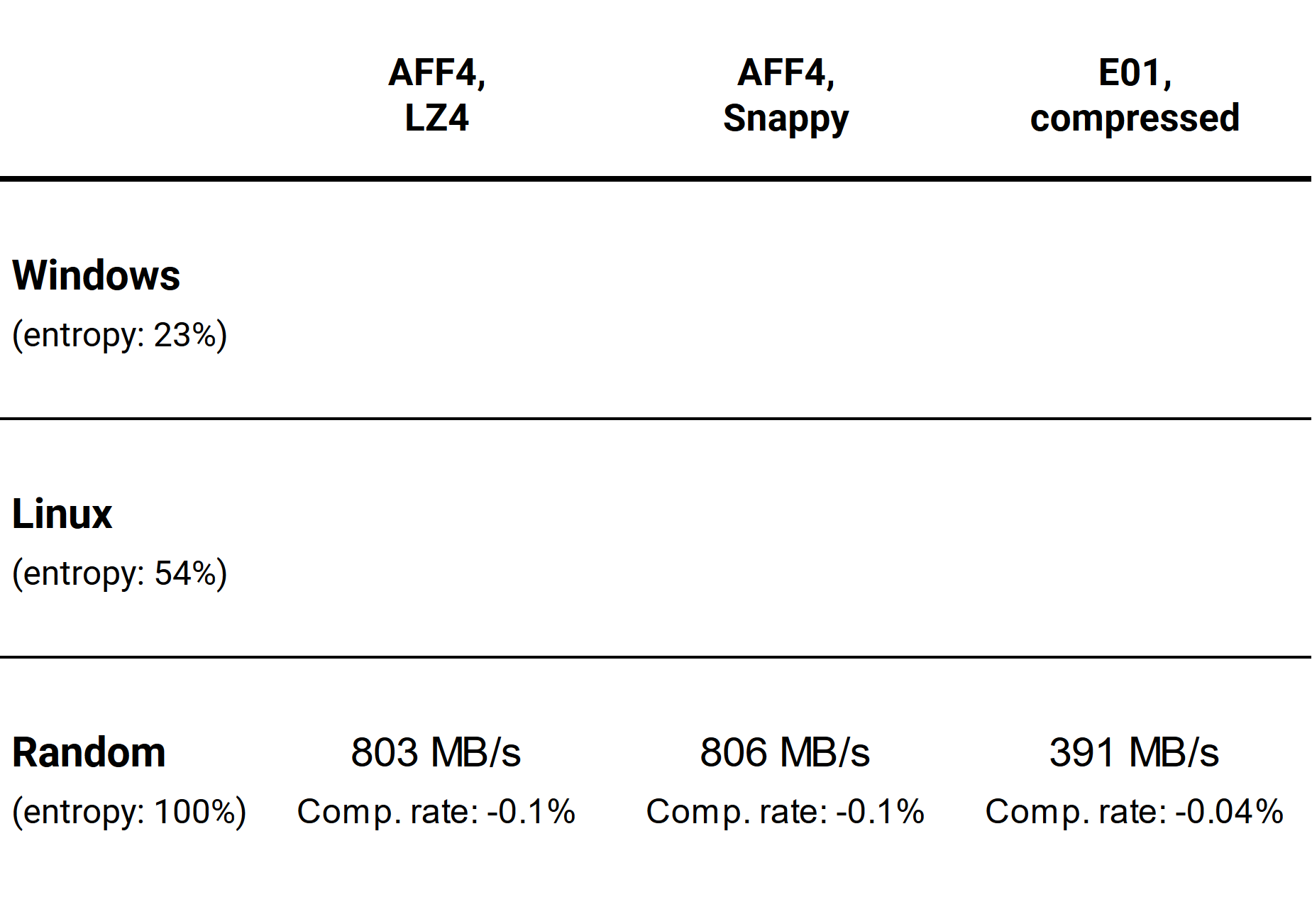

Finally, let’s overwrite sectors of the source drive with random values and repeat all the procedures.

This time, AFF4 with either compression method is twice as fast as E01. Random data does not compress at all, which is why the resulting images are even slightly bigger than the source data.
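You can reproduce the “bigger than the source” effect with any general-purpose compressor. The sketch below uses Python’s `zlib` as a stand-in for all three codecs. It assumes “compression rate” means the fraction of space saved (1 - compressed/original); the article does not define the term, so treat that as our assumption. The `space_saving` helper is hypothetical.

```python
import os
import zlib


def space_saving(original_size: int, compressed_size: int) -> float:
    """Fraction of space saved; negative when the 'compressed' output is bigger."""
    return 1.0 - compressed_size / original_size


random_block = os.urandom(1024 * 1024)  # stands in for encrypted or already-compressed data
text_block = b"The quick brown fox jumps over the lazy dog. " * 23_000  # highly repetitive

for name, block in (("random", random_block), ("repetitive", text_block)):
    packed = zlib.compress(block)
    print(name, len(block), len(packed), f"{space_saving(len(block), len(packed)):.2%}")
```

On random input, the compressor falls back to storing the data almost verbatim and adds a small amount of framing overhead, so the saving comes out slightly negative while the CPU work is still spent.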

Final results

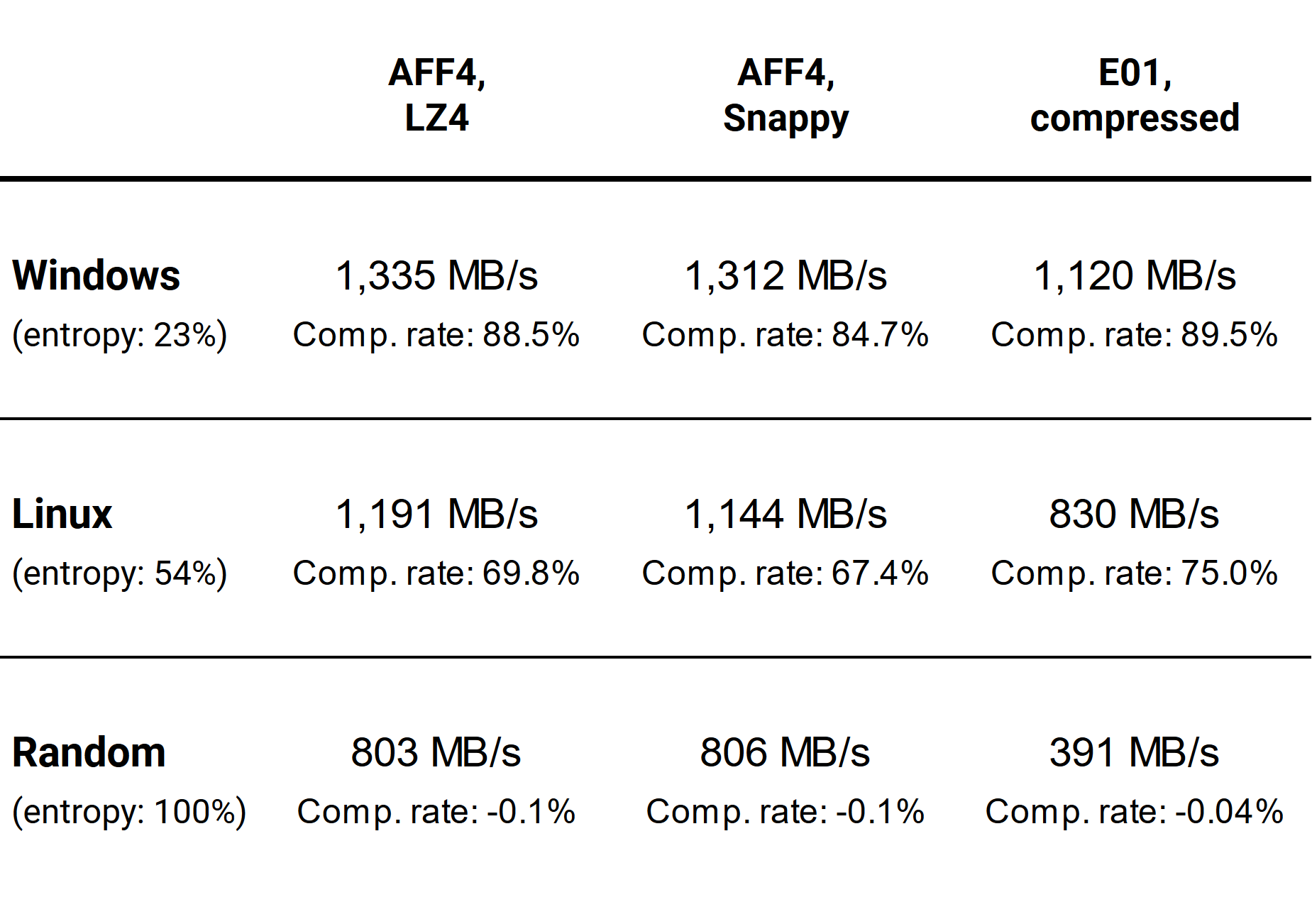

Well, let’s check our final standings, with the average imaging speeds and compression rates for each combination:

What conclusions can we draw from our exciting file format competition?

- On data with low to medium randomness, AFF4’s LZ4 and Snappy compressions provide higher imaging speeds compared to compressed E01.

- In terms of compression rate, AFF4 with LZ4 compression performs almost as well as compressed E01 for source devices with low to medium data randomness.

- As expected, random data compresses poorly, and the compression process only lowers the overall imaging speed without any benefits for saving storage space.

- Imaging drives full of compressed (photos, video, archives) or encrypted (e.g. BitLocker) data to AFF4 will be twice as fast as to E01.
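The practical meaning of “twice as fast” follows from simple arithmetic. If $S$ is the amount of data read from the source (250GB in our setup) and $v$ is the average imaging speed, then, assuming the speed holds across the whole drive:

$$
t = \frac{S}{v}, \qquad v_{\text{AFF4}} \approx 2\,v_{\text{E01}} \;\Rightarrow\; t_{\text{AFF4}} \approx \frac{S}{2\,v_{\text{E01}}} = \frac{t_{\text{E01}}}{2}
$$

In other words, for the same encrypted or already-compressed drive, the AFF4 acquisition takes roughly half the time.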

- Insight Forensic 5.6 – Now with Btrfs and LVM support - December 10, 2024

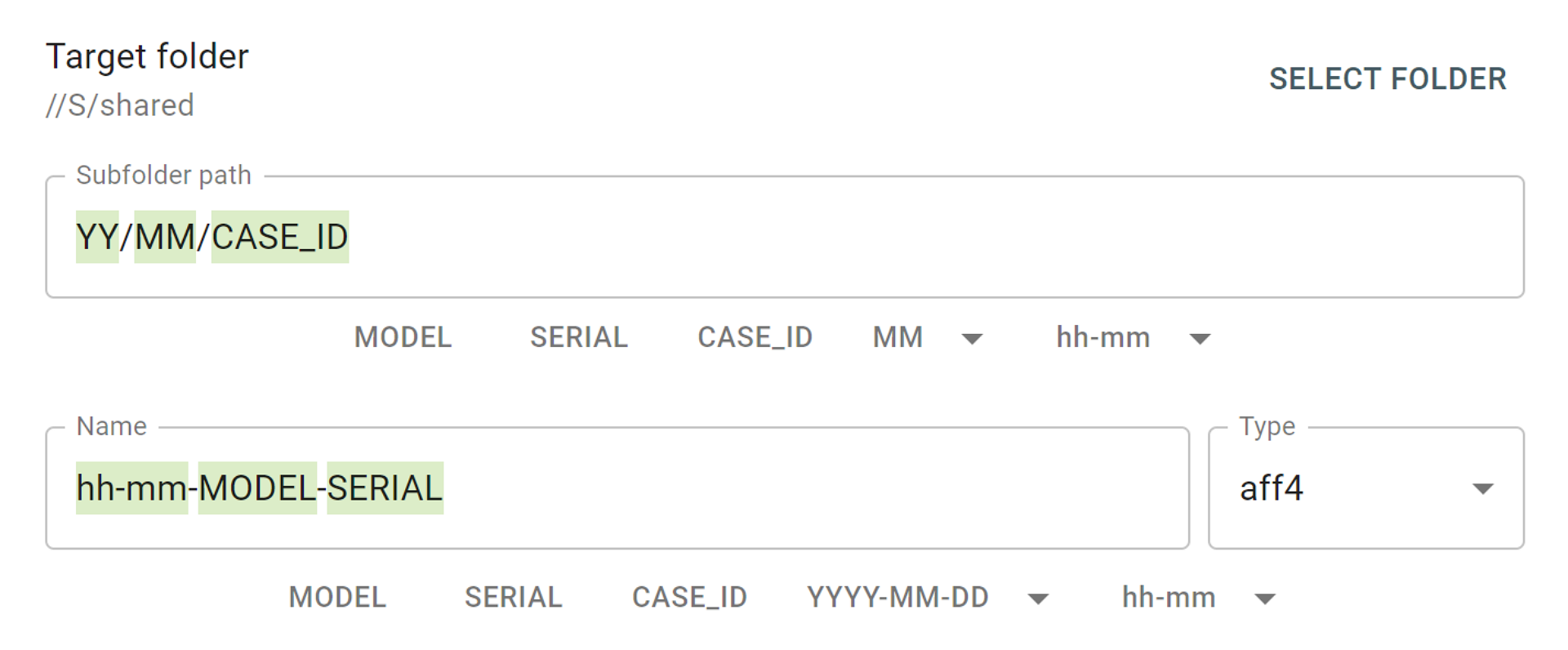

- TaskForce 2024.9 update – Templates for target files - September 26, 2024

E01 vs AFF4: Which image format is faster? (July 9, 2024)